Deep learning using WebGL

Xavier Bourry

- CTO of Jeeliz, a startup specializing in deep learning using WebGL,

- Co-author of Deep Learning in the Browser,

- WebGL Academy - vanilla WebGL/three.js interactive tutorials: www.webglacademy.com,

- Email: xavier@jeeliz.com

- Twitter: @xavierbourry

Deep learning using WebGL

- Jeeliz's work

- What is a deep learning network

- WebGL implementation

- WebGL GPGPU tricks

- Compatibility

- Career advice

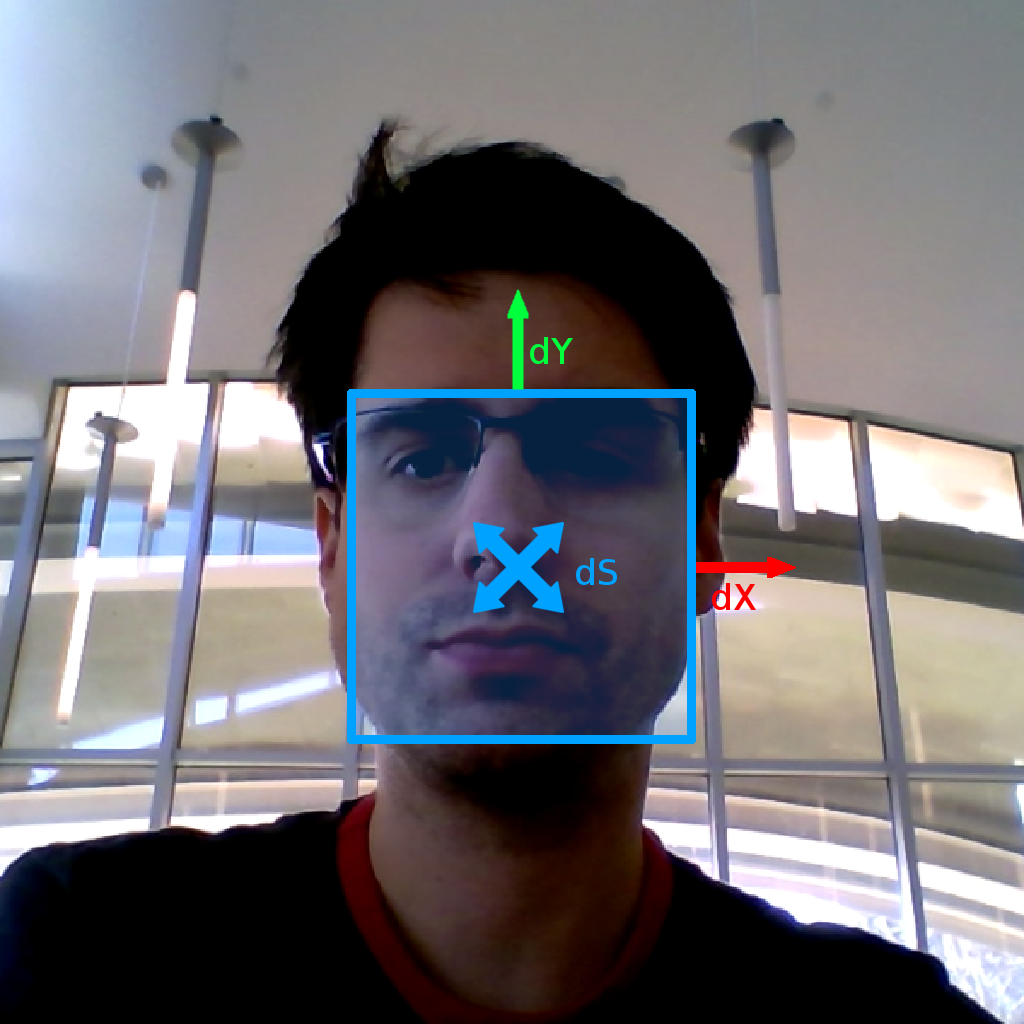

What we do @Jeeliz

- Very fast deep neural networks running client-side,

- Most interesting use-case: video processing!

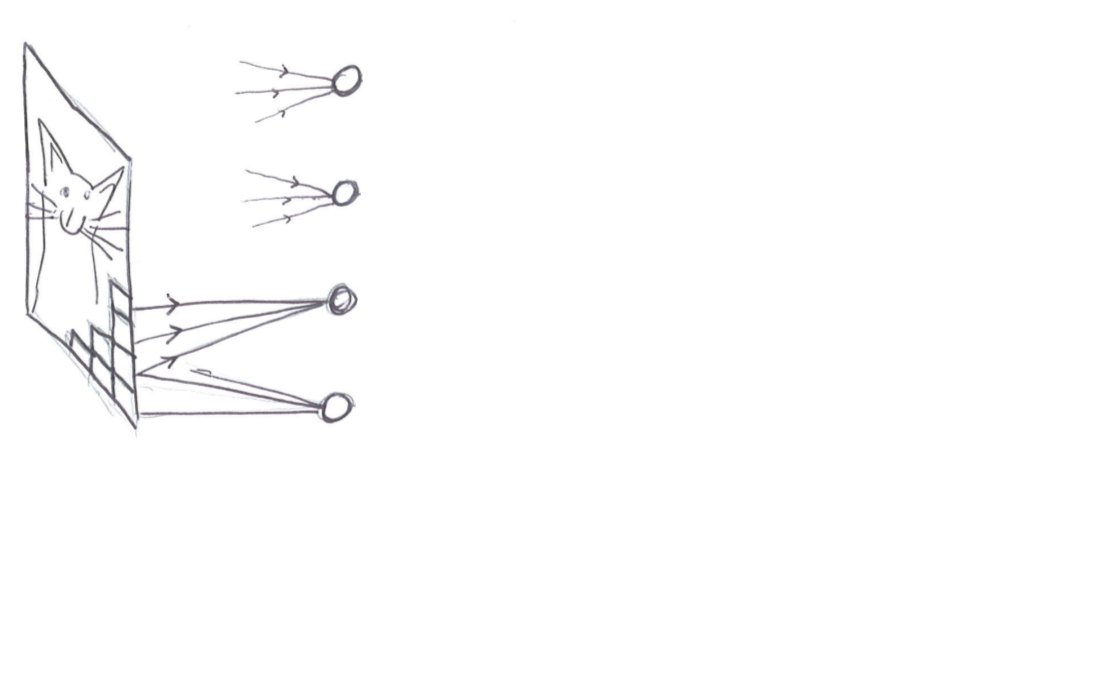

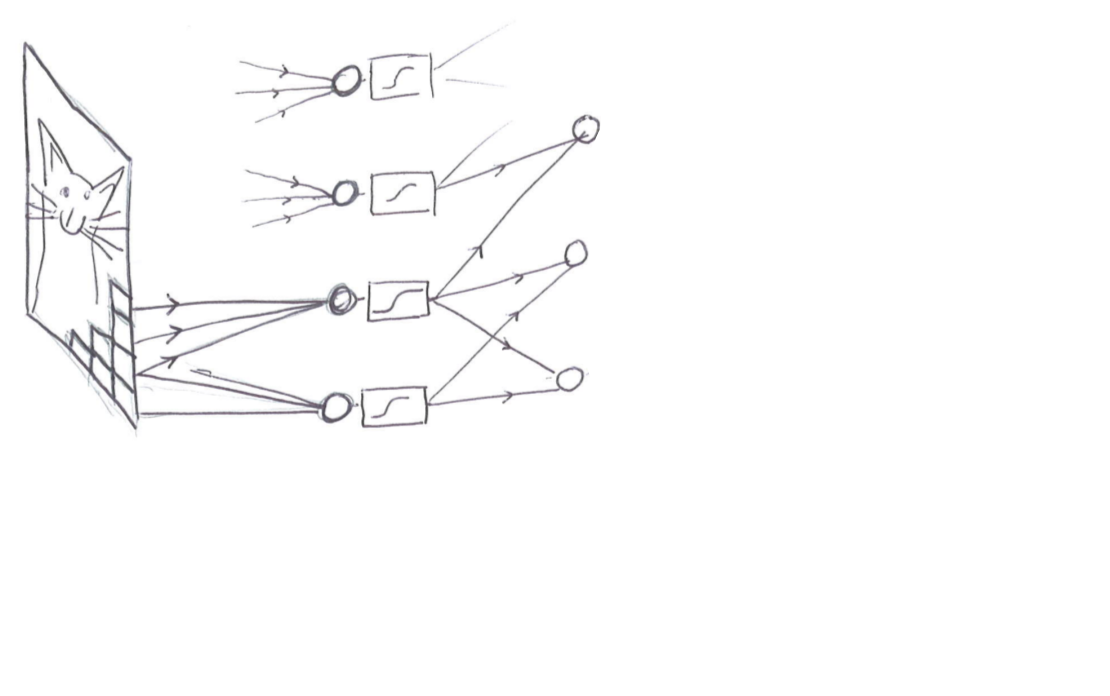

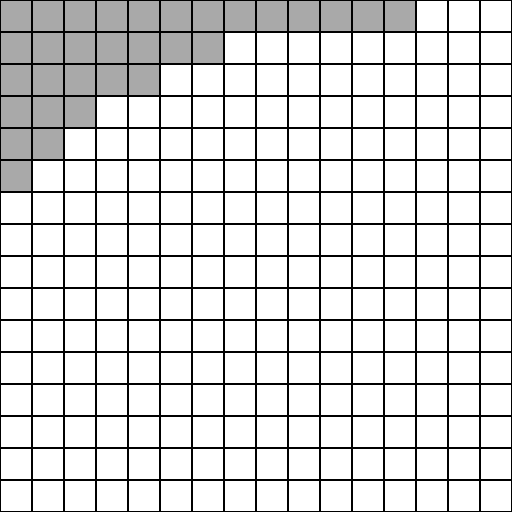

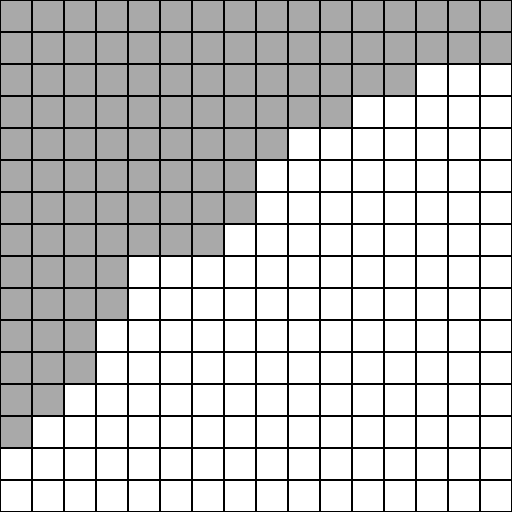

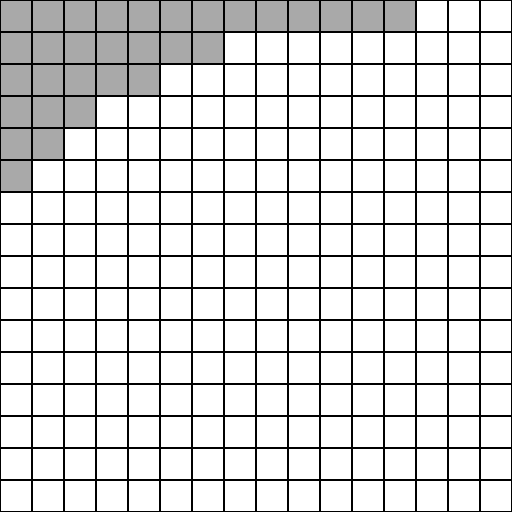

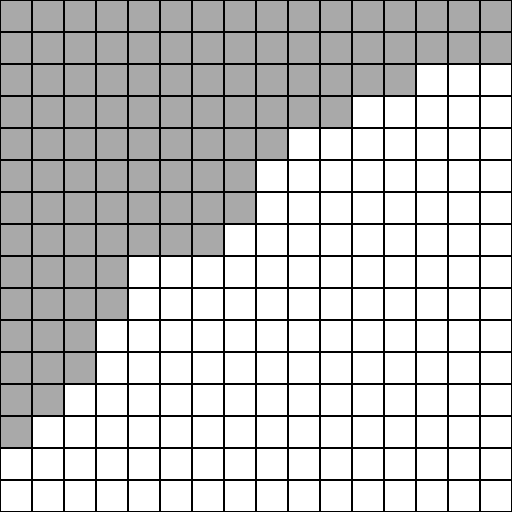

- Input: 64×64 pixel grayscale image → 64*64 = 4096-component vector,

- Output:

  - whether the image is a face → detection factor,

  - how to move the input detection frame → dX, dY, dS,

  - the 3D rotation? lighting parameters?

  - whatever else you need...

- 60 times per second.
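To make the input side concrete, here is a minimal sketch (not Jeeliz's code) that turns a 64×64 RGBA frame, such as the `data` of a canvas `ImageData`, into the 4096-component grayscale vector. The helper name, the Rec. 601 luma weights and the [0, 1] scaling are assumptions.

```javascript
// Hypothetical helper: flatten a 64x64 RGBA frame into a 4096-component
// grayscale input vector. Assumes Rec. 601 luma weights and [0, 1] scaling.
const INPUT_SIZE = 64;

function toInputVector(rgba) {
  const n = INPUT_SIZE * INPUT_SIZE; // 4096 components
  if (rgba.length !== 4 * n) {
    throw new Error(`expected ${4 * n} RGBA bytes, got ${rgba.length}`);
  }
  const input = new Float32Array(n);
  for (let i = 0; i < n; i++) {
    const r = rgba[4 * i];
    const g = rgba[4 * i + 1];
    const b = rgba[4 * i + 2];
    // Weighted sum of the color channels, scaled from [0, 255] to [0, 1]
    input[i] = (0.299 * r + 0.587 * g + 0.114 * b) / 255;
  }
  return input;
}
```

In a real pipeline this conversion would stay on the GPU, since the video frame is already a texture; the CPU version only makes the 64×64 → 4096 shape explicit.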

Demo time :)

Historical use case: VTO (virtual try-on)

Face filters

Build Snapchat-like face filters that work in the browser.

Webojis

Expression detection library for building Animoji-like apps that run in the browser.

- Demos: SVG Cartman, 3D Fox,

- Demo app: webojis.com,

- GitHub repo.

3D object detection and tracking

Great for augmented reality!

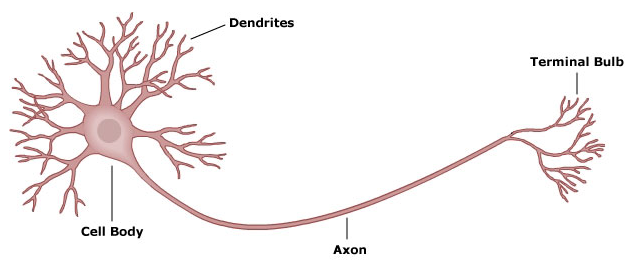

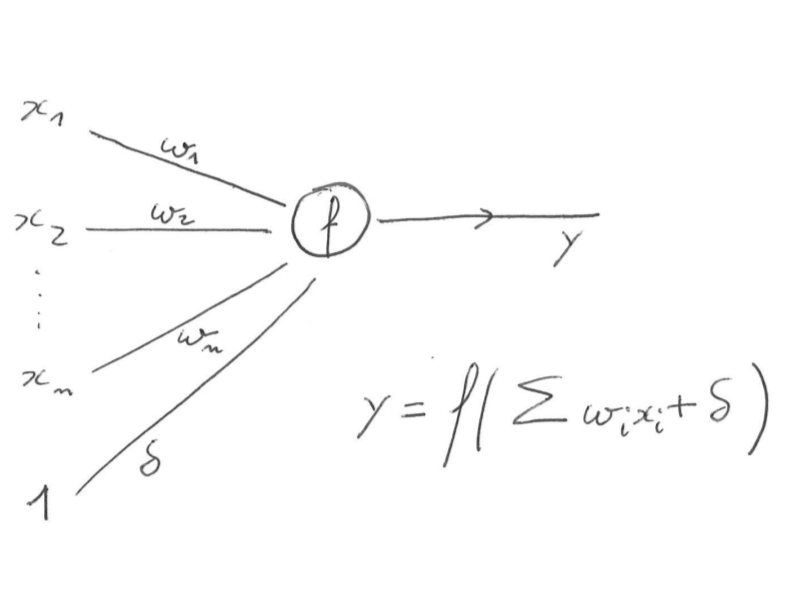

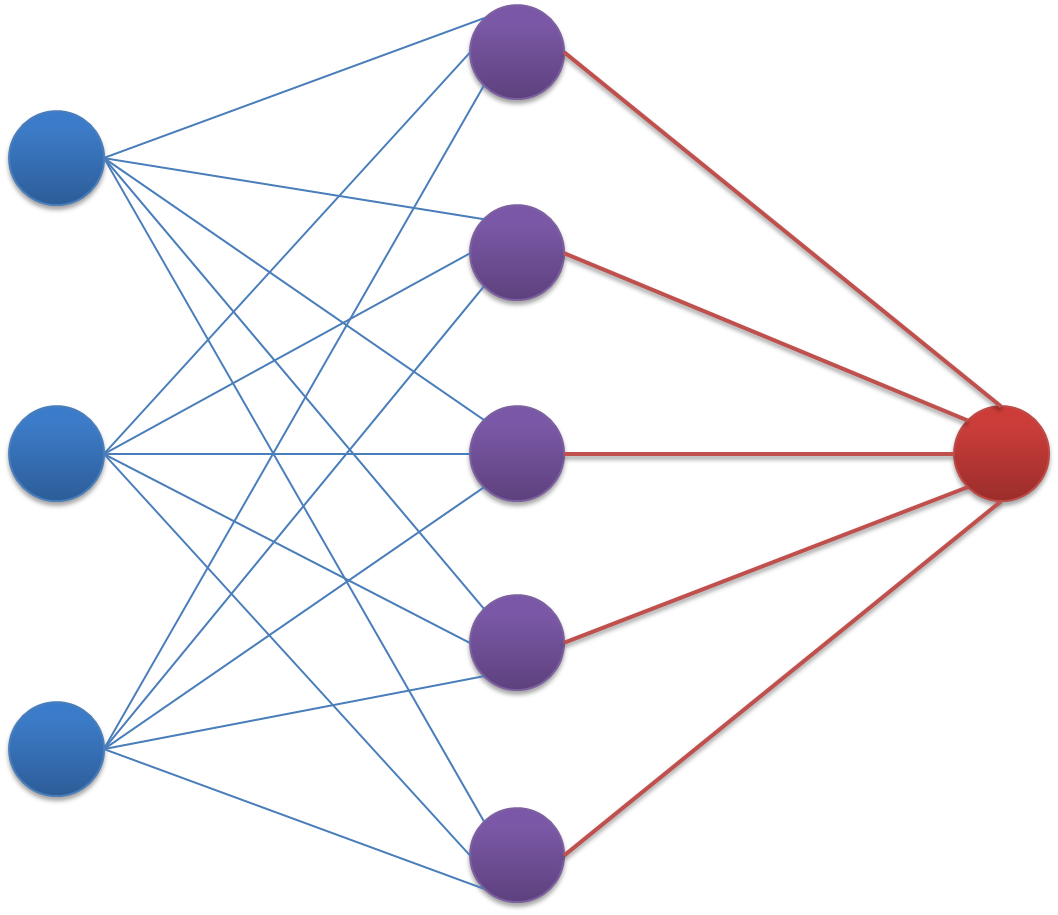

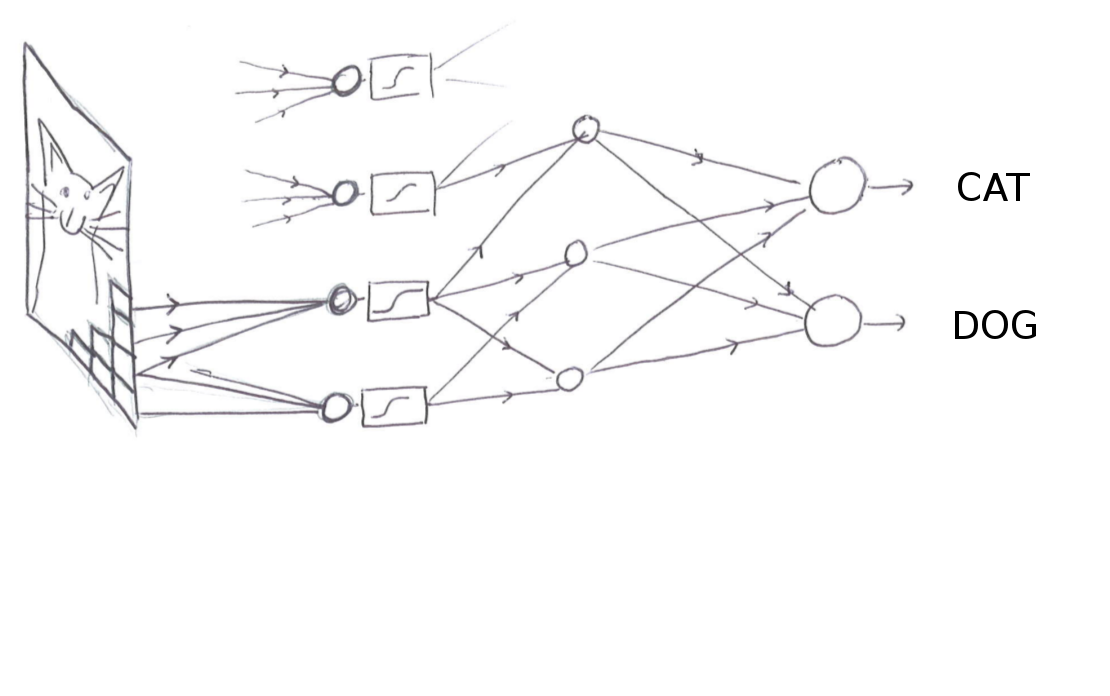

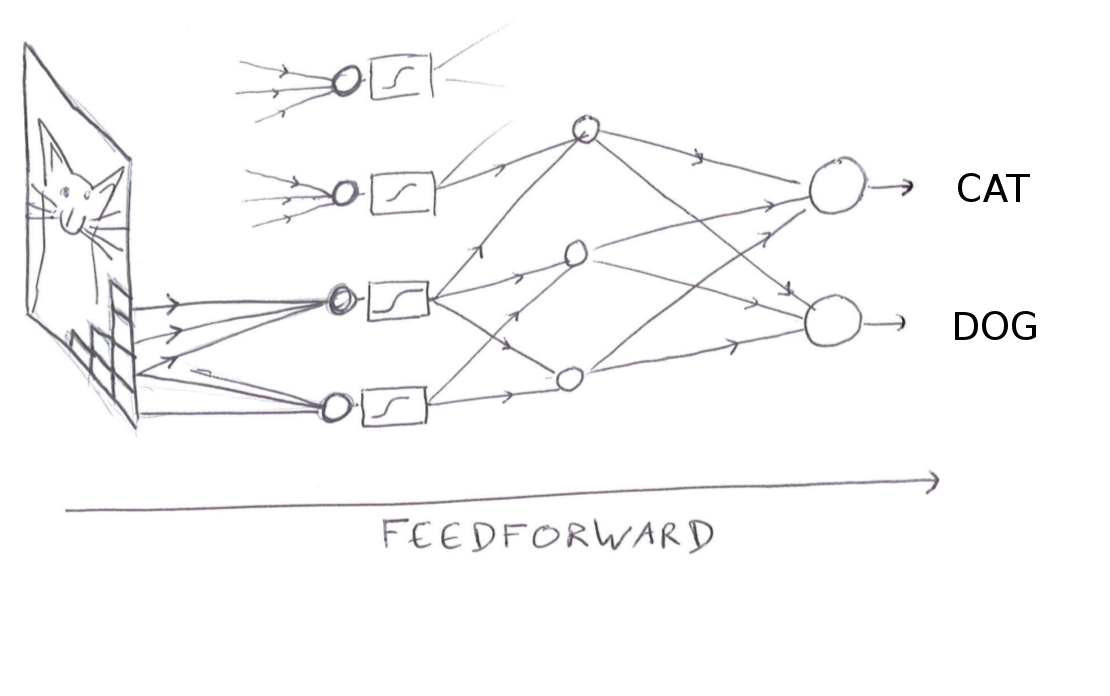

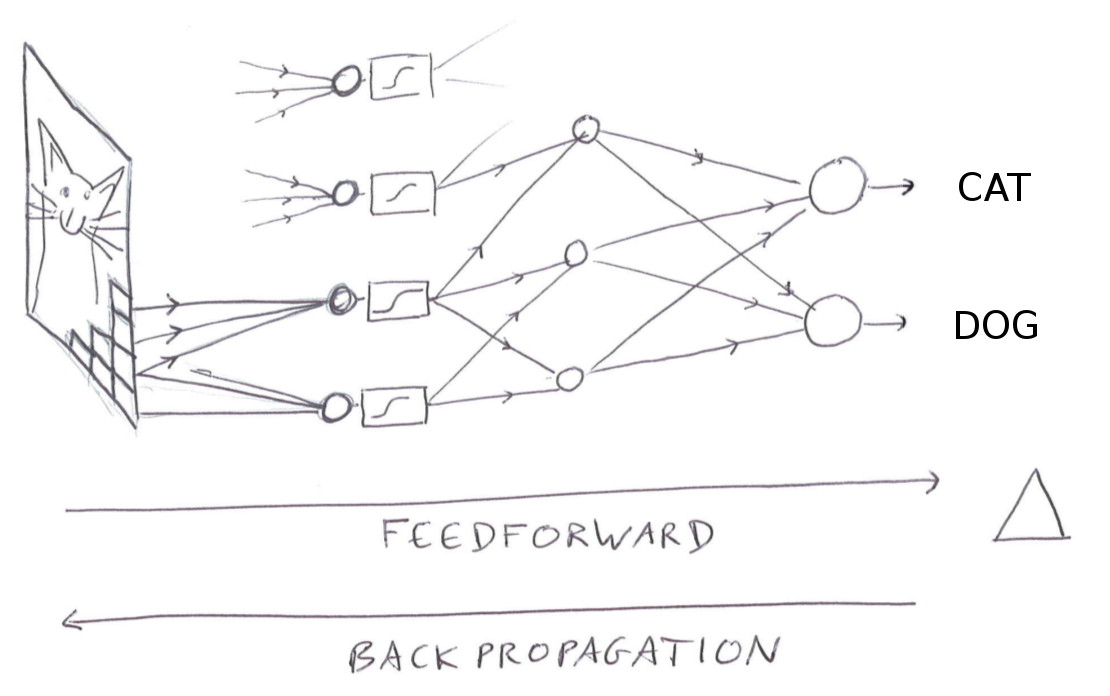

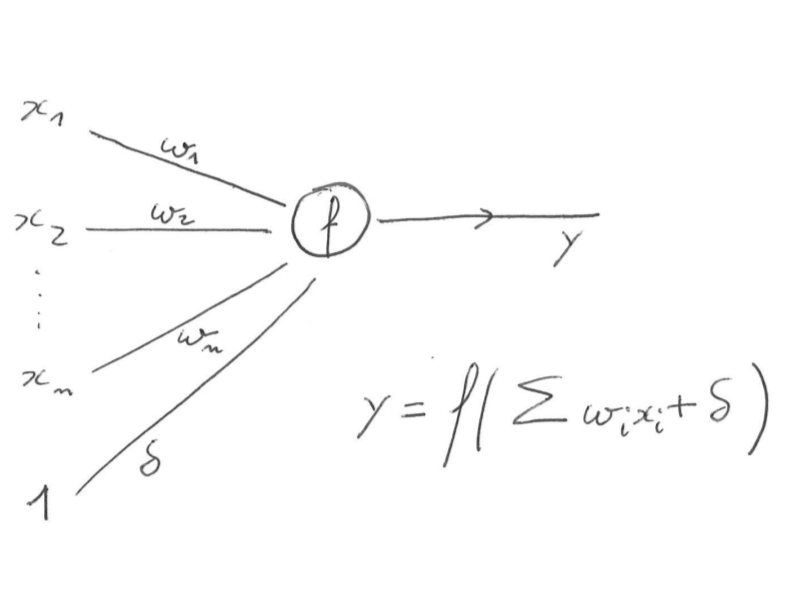

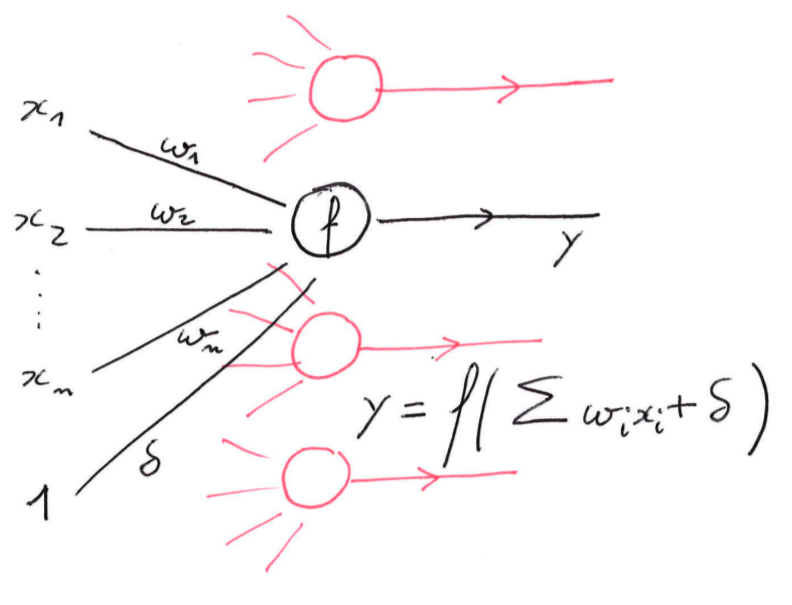

Deep learning networks

WebGL implementation

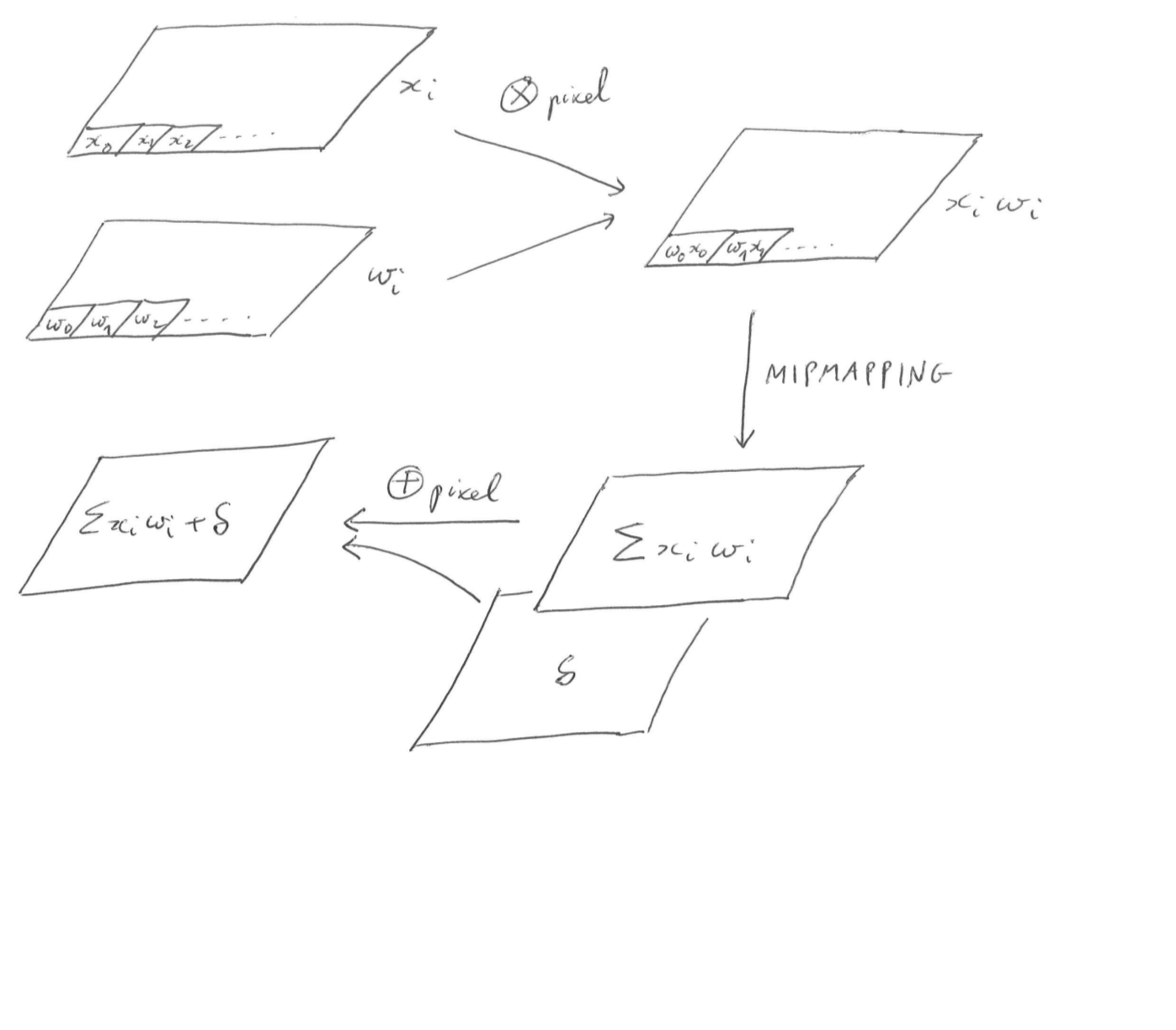

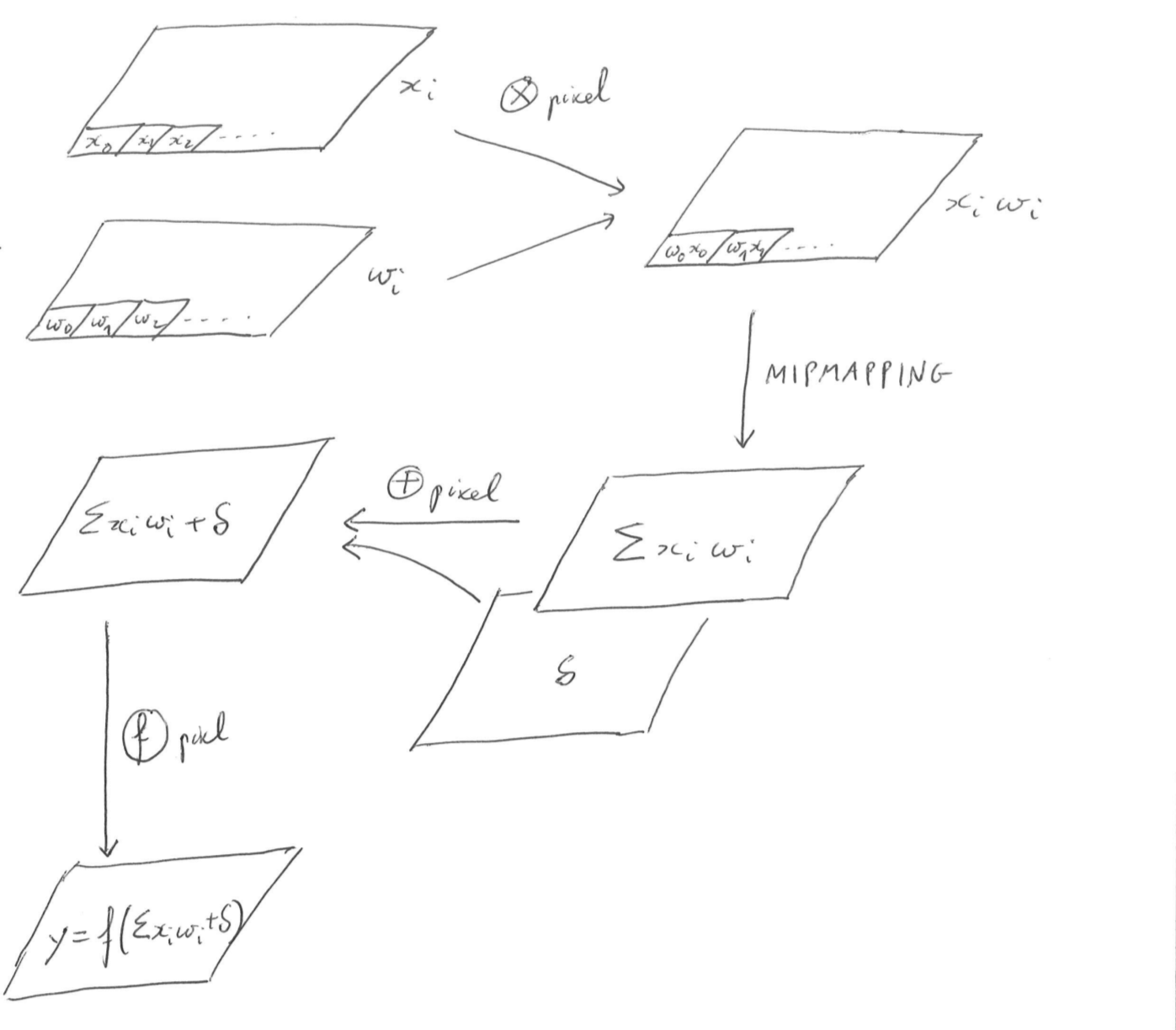

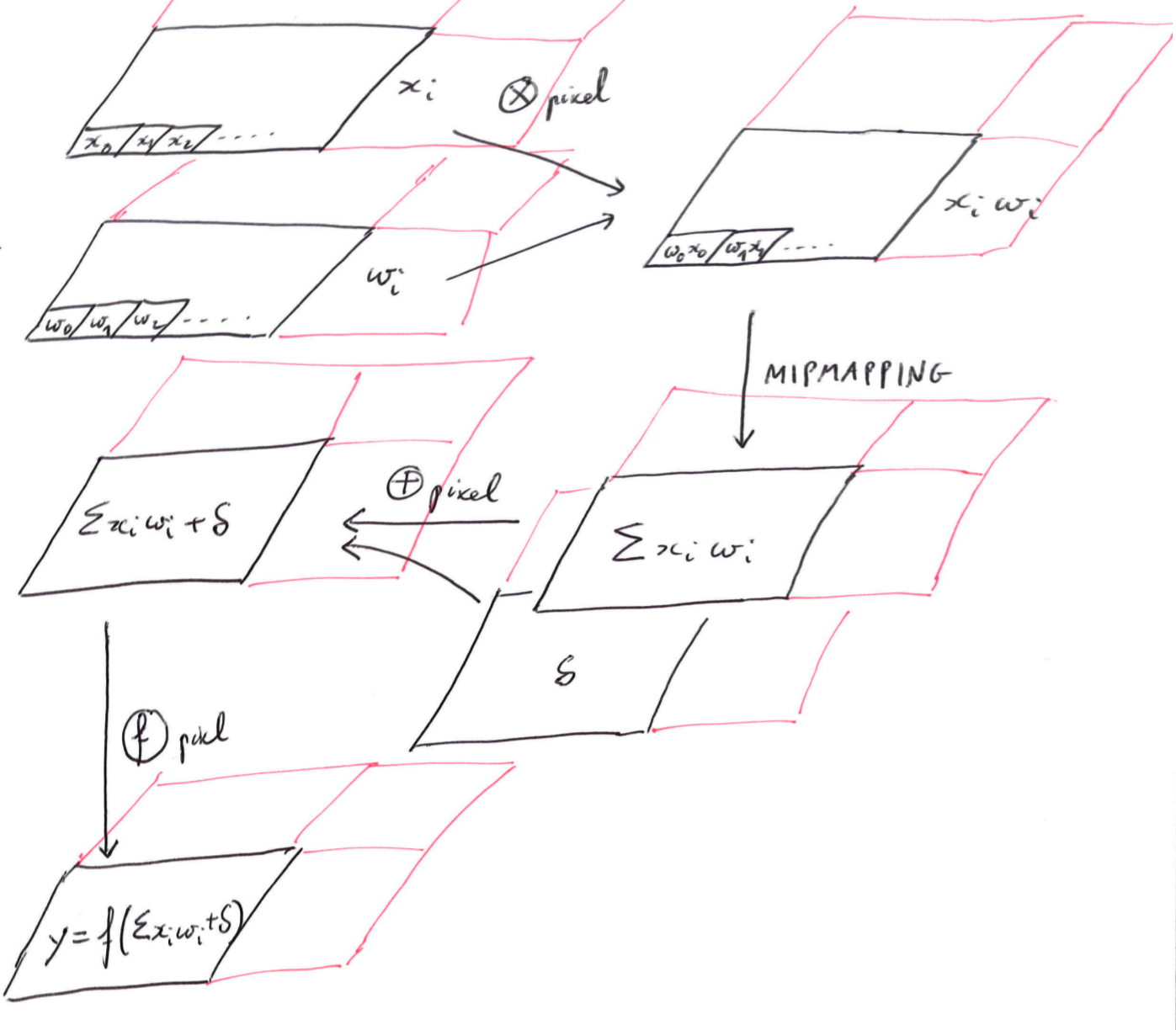

Old school GPGPU

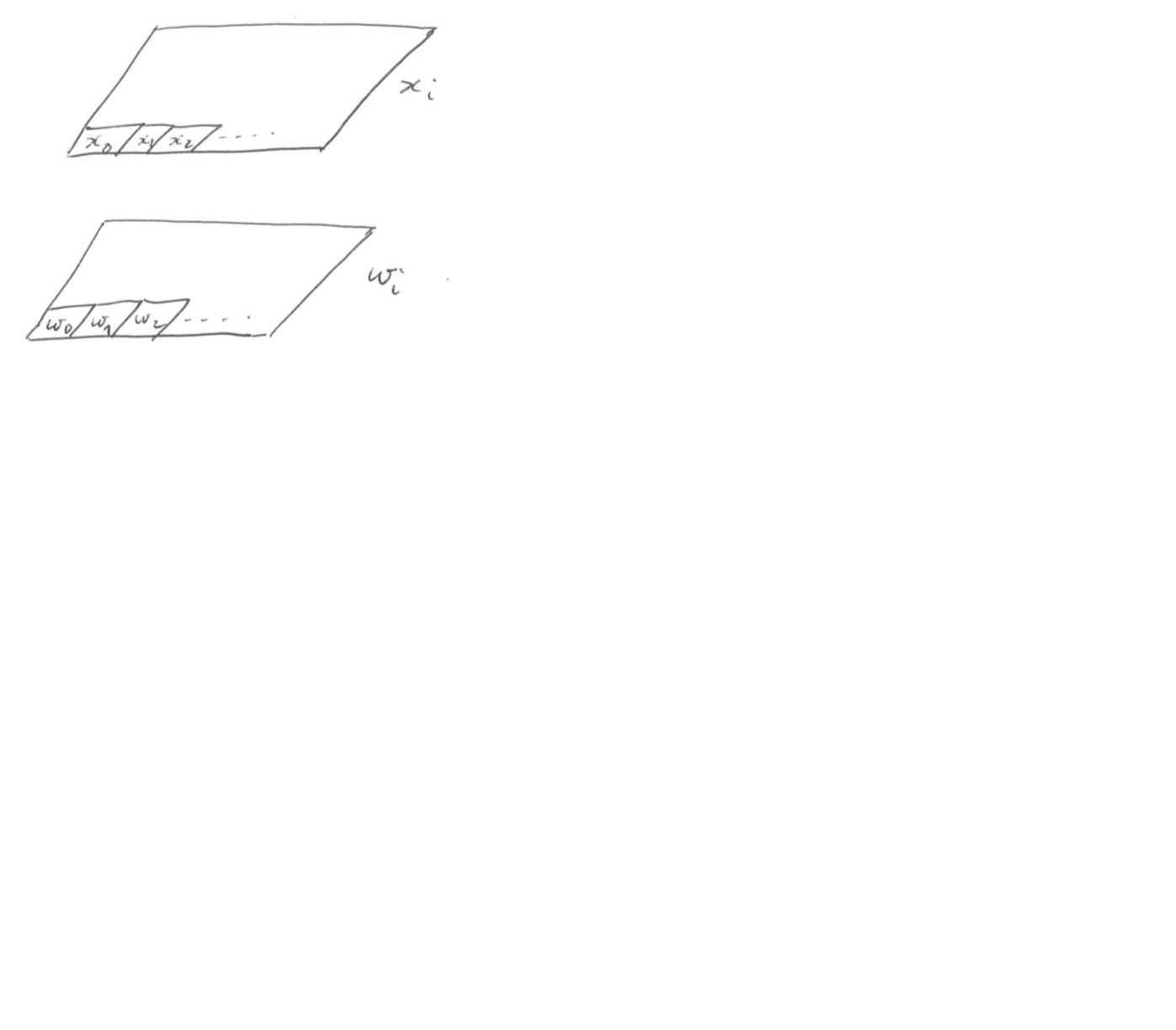

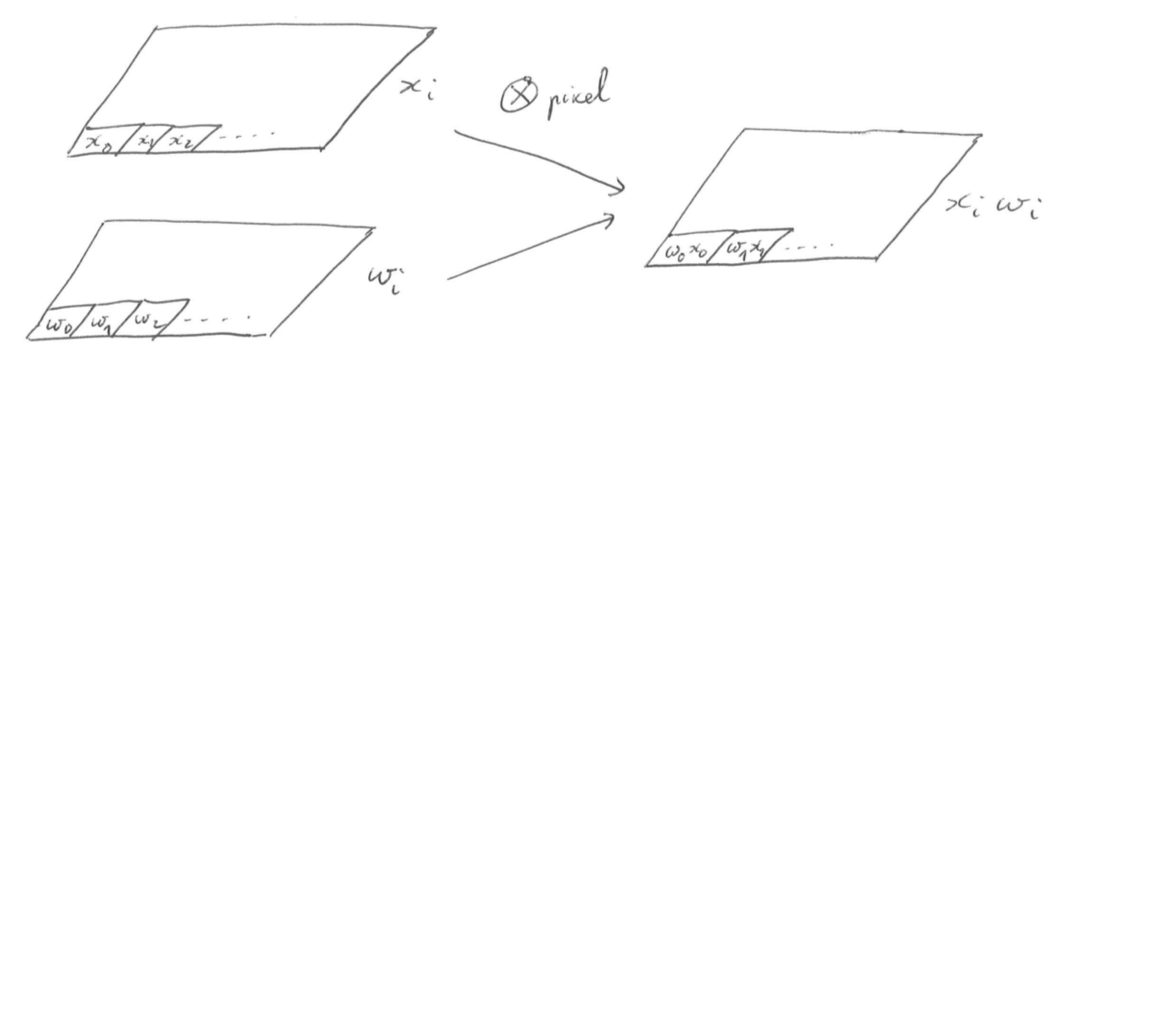

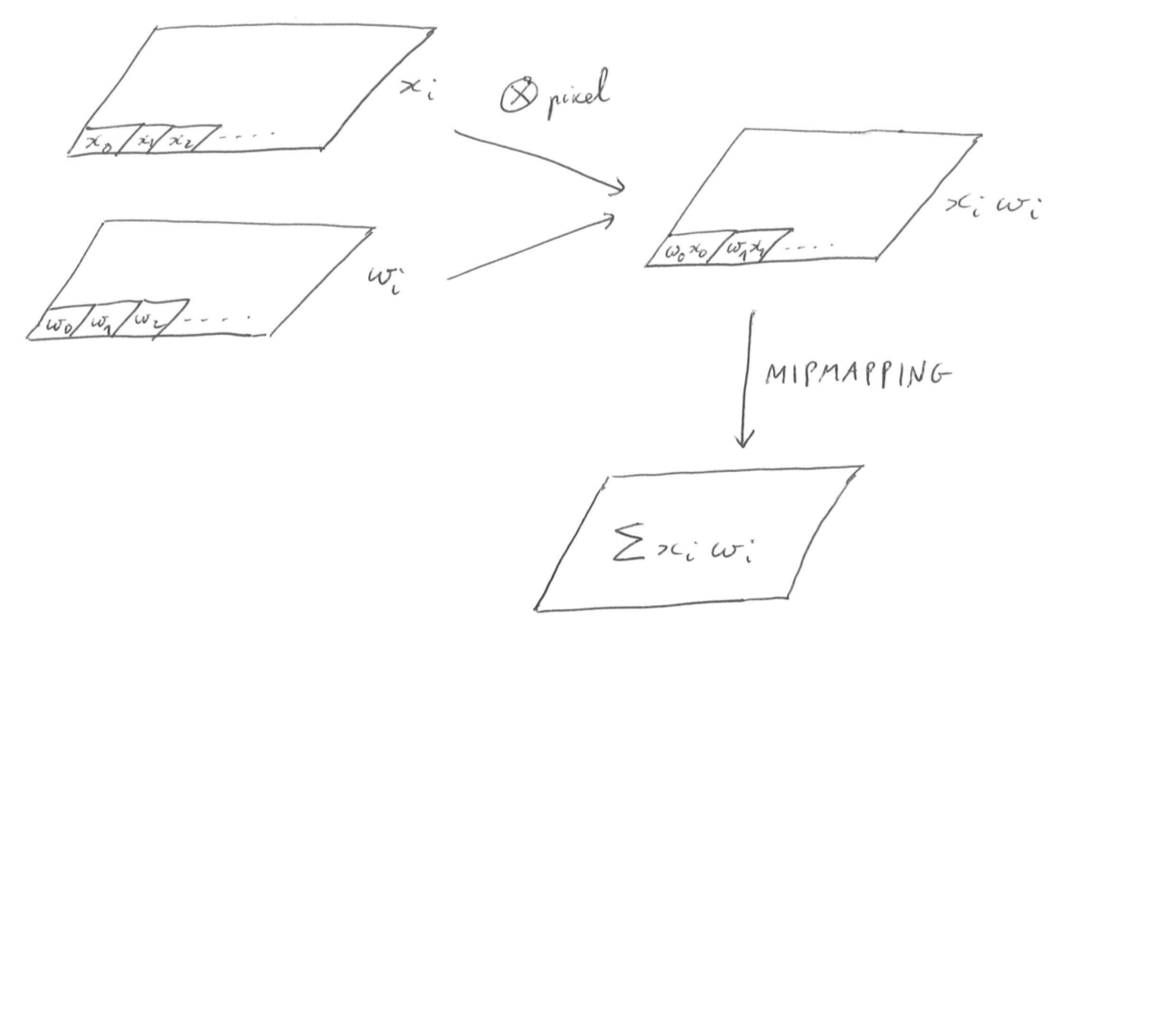

- Data is stored in FLOAT/HALF_FLOAT textures,

- Each operation is done in the fragment shader,

- The output is also stored in a texture (RTT: render to texture),

- It can be read back on the CPU with GL.readPixels(...).
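The GPGPU model above can be emulated on the CPU to make it concrete: a "texture" is a flat RGBA Float32Array, and a render pass calls a fragment function once per output pixel, independently of the others. This is a hypothetical teaching sketch (`makeTexture`, `fetchTexel` and `runPass` are made-up names), not WebGL code.

```javascript
// CPU emulation of the GPGPU model (hypothetical helpers, not WebGL calls).
// A "texture" is { width, height, data } with 4 floats (RGBA) per pixel.
function makeTexture(width, height) {
  return { width, height, data: new Float32Array(4 * width * height) };
}

// RGBA value at integer pixel coordinates, like a NEAREST-filtered lookup.
function fetchTexel(tex, x, y) {
  const i = 4 * (y * tex.width + x);
  return tex.data.subarray(i, i + 4);
}

// One render pass: call fragmentFn once per output pixel and store the
// results in a new texture (render to texture). Each call depends only on
// its own coordinates, which is what lets the GPU run them in parallel.
function runPass(width, height, fragmentFn) {
  const out = makeTexture(width, height);
  for (let y = 0; y < height; y++) {
    for (let x = 0; x < width; x++) {
      out.data.set(fragmentFn(x, y), 4 * (y * width + x));
    }
  }
  return out;
}

// Example pass: element-wise sum of two textures.
const a = makeTexture(2, 2);
const b = makeTexture(2, 2);
a.data.fill(1.5);
b.data.fill(2.25);
const sum = runPass(2, 2, (x, y) => {
  const ta = fetchTexel(a, x, y);
  const tb = fetchTexel(b, x, y);
  return [ta[0] + tb[0], ta[1] + tb[1], ta[2] + tb[2], ta[3] + tb[3]];
});
```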

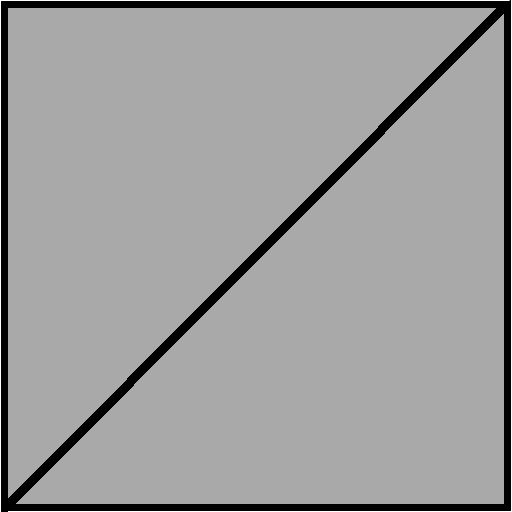

Shader usage

Vertex shader

→ draws 2 triangles (a quad covering the whole output)

Fragment shader

→ pixel-per-pixel operations
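A typical setup for this vertex/fragment split, sketched under assumptions: the vertex buffer holds 2 triangles that cover clip space, the vertex shader only positions them, and all the computation lives in the fragment shader. The shaders are GLSL ES 1.0 (WebGL 1); the attribute, varying and uniform names, and the `quadArea` check, are hypothetical.

```javascript
// Full-screen quad: 2 triangles covering clip space [-1, 1] x [-1, 1].
// In a real program it is drawn with gl.drawArrays(gl.TRIANGLES, 0, 6).
const QUAD_VERTICES = new Float32Array([
  -1, -1,   1, -1,   1,  1, // first triangle
  -1, -1,   1,  1,  -1,  1, // second triangle
]);

// Vertex shader: positions the 2 triangles and passes texture coordinates.
const VERTEX_SHADER_SOURCE = `
attribute vec2 position;
varying vec2 vUV;
void main(void) {
  vUV = 0.5 * position + 0.5; // map [-1, 1] to [0, 1]
  gl_Position = vec4(position, 0.0, 1.0);
}`;

// Fragment shader: the per-pixel computation (here, adding two textures).
const FRAGMENT_SHADER_SOURCE = `
precision highp float;
uniform sampler2D uTextureA;
uniform sampler2D uTextureB;
varying vec2 vUV;
void main(void) {
  gl_FragColor = texture2D(uTextureA, vUV) + texture2D(uTextureB, vUV);
}`;

// Sum of the triangle areas: 4 means the quad covers the 2x2 clip space.
function quadArea(vertices) {
  let area = 0;
  for (let i = 0; i < vertices.length; i += 6) {
    const [x0, y0, x1, y1, x2, y2] = vertices.subarray(i, i + 6);
    area += Math.abs((x1 - x0) * (y2 - y0) - (x2 - x0) * (y1 - y0)) / 2;
  }
  return area;
}
```

Since every output pixel is covered exactly once, the fragment shader runs once per output texel, which is the "pixel-per-pixel operations" part.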

Pros of GPU processing

- Feedforward and backpropagation → layers are processed sequentially,

- But within a layer → each neuron can be computed in parallel,

- Image processing → many neurons, at least in the first layers,

- Layer parameters = textures (synaptic weights, connectivity, summed inputs, ...).
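The per-neuron parallelism can be sketched on the CPU (a hypothetical model, not the Jeeliz implementation): the weights of a fully connected layer form a 2D array, i.e. a texture, and each neuron's output depends only on its own row of weights and on the previous layer's output. Layers still run one after another; only the loop over neurons would become parallel fragments.

```javascript
// Hypothetical CPU model of one fully connected layer stored "as a texture":
// weights is a row-major array of size nOut x nIn (one row per neuron).
function neuronOutput(weights, biases, input, neuron, activation) {
  const nIn = input.length;
  let sum = biases[neuron];
  for (let j = 0; j < nIn; j++) {
    sum += weights[neuron * nIn + j] * input[j];
  }
  return activation(sum);
}

function denseLayer(weights, biases, input, activation) {
  const nOut = biases.length;
  if (weights.length !== nOut * input.length) {
    throw new Error("weights must have nOut * nIn entries");
  }
  const output = new Float32Array(nOut);
  // On the GPU this loop disappears: each iteration is a separate fragment.
  for (let i = 0; i < nOut; i++) {
    output[i] = neuronOutput(weights, biases, input, i, activation);
  }
  return output;
}

const relu = (x) => Math.max(0, x);
```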

WebGL GPGPU tricks

All on the GPU

What happens on the GPU stays on the GPU.

- Avoid gl.readPixels() and canvas.toDataURL(),

- There is always a way to avoid using the CPU,

- CPU/GPU synchronization → can be slow...

Reading FLOAT/HALF_FLOAT textures

- No GL.readPixels() with FLOAT/HALF_FLOAT textures,

- → gl_FragColor encodes 1 float in its 4 RGBA channels (read back as 4 bytes),

- → 4 renderings, one for each of the R, G, B, A values.
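On the CPU side, the bytes returned by `gl.readPixels(0, 0, w, h, gl.RGBA, gl.UNSIGNED_BYTE, bytes)` then have to be decoded back into floats. The sketch below assumes the shader wrote the IEEE-754 bits of each float big-endian (R = most significant byte); that layout and the helper names are assumptions. GLSL ES 1.0 has no bit operations, so the shader side would have to build those bytes arithmetically (sign, exponent, mantissa) and is not shown.

```javascript
// Decode readPixels() output where each pixel packs one float32 in its 4
// RGBA bytes. Assumed layout: IEEE-754 bits, R = most significant byte.
function decodePackedFloats(bytes) {
  if (bytes.length % 4 !== 0) throw new Error("expected 4 bytes per pixel");
  const view = new DataView(bytes.buffer, bytes.byteOffset, bytes.byteLength);
  const out = new Float32Array(bytes.length / 4);
  for (let i = 0; i < out.length; i++) {
    out[i] = view.getFloat32(4 * i, false); // false = big-endian
  }
  return out;
}

// The 4 renderings (one per channel of the float texture) are merged back
// into interleaved RGBA floats.
function mergeChannels(r, g, b, a) {
  const out = new Float32Array(4 * r.length);
  for (let i = 0; i < r.length; i++) {
    out[4 * i] = r[i];
    out[4 * i + 1] = g[i];
    out[4 * i + 2] = b[i];
    out[4 * i + 3] = a[i];
  }
  return out;
}
```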

During training

- Dataset as texture atlas

- Load everything on the GPU once and for all.
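Packing the dataset into an atlas mostly comes down to layout arithmetic. This sketch assumes equally sized samples placed on a near-square grid; `atlasLayout` and `sampleUV` are hypothetical helpers, and `maxTextureSize` would typically come from `gl.getParameter(gl.MAX_TEXTURE_SIZE)`.

```javascript
// Hypothetical atlas layout: nSamples images of sampleW x sampleH pixels
// packed into one texture, on a grid that is as square as possible.
function atlasLayout(nSamples, sampleW, sampleH, maxTextureSize) {
  const cols = Math.ceil(Math.sqrt(nSamples));
  const rows = Math.ceil(nSamples / cols);
  const width = cols * sampleW;
  const height = rows * sampleH;
  if (width > maxTextureSize || height > maxTextureSize) {
    throw new Error(`atlas ${width}x${height} exceeds MAX_TEXTURE_SIZE ${maxTextureSize}`);
  }
  return { cols, rows, width, height, sampleW, sampleH };
}

// Normalized texture coordinates [u0, v0, u1, v1] of sample i in the atlas,
// e.g. passed as a uniform so the shader only reads that sample.
function sampleUV(layout, i) {
  const col = i % layout.cols;
  const row = Math.floor(i / layout.cols);
  const u0 = (col * layout.sampleW) / layout.width;
  const v0 = (row * layout.sampleH) / layout.height;
  return [u0, v0, u0 + layout.sampleW / layout.width, v0 + layout.sampleH / layout.height];
}
```

Picking a training sample then only changes a uniform, so no image data crosses the CPU/GPU boundary during training.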

Better than images: 3D rendering

Trainer demo

- Set of 3D models + random parameters (rotation, lighting, ...) → image,

- 3D engine wired to the neural network input,

- Like an infinite training set of images,

- → No overfitting

- → No labelling error
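The "random parameters" step could look like the sketch below. The seeded generator is a mulberry32-style PRNG (a swapped-in choice for reproducibility, not necessarily what the trainer uses), and the parameter names and ranges are placeholders.

```javascript
// Small seeded PRNG (mulberry32-style) so a training run can be reproduced.
function mulberry32(seed) {
  let a = seed >>> 0;
  return function () {
    a = (a + 0x6d2b79f5) >>> 0;
    let t = a;
    t = Math.imul(t ^ (t >>> 15), t | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296; // uniform in [0, 1)
  };
}

// Hypothetical scene parameters for one rendered training sample. The
// ranges are placeholders to be tuned for the target use case.
function sampleSceneParams(rand, nModels) {
  const uniform = (lo, hi) => lo + (hi - lo) * rand();
  return {
    modelIndex: Math.floor(rand() * nModels),
    rotation: [uniform(-Math.PI, Math.PI), uniform(-Math.PI, Math.PI), uniform(-Math.PI, Math.PI)],
    lightDirection: [uniform(-1, 1), uniform(-1, 1), 1],
    lightIntensity: uniform(0.5, 1.5),
  };
}
```

Because the parameters behind each rendered image are known exactly, they can be used directly as labels, which is where the absence of labelling errors comes from.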

The context

- Initialization options:

GL = canvas.getContext("webgl", { antialias: false, preserveDrawingBuffer: true });

- Disable other features:

GL.disable(GL.DEPTH_TEST);
GL.disable(GL.DITHER);

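Float precision

- GL.FLOAT → 32 bits,

- GL.HALF_FLOAT → 16 bits, enough for deep learning,

- GL.UNSIGNED_BYTE → 8 bits, not enough,

- Empirical result: at least 11 bits of precision are needed for deep learning (HALF_FLOAT has an 11-bit significand: 10 stored bits + 1 implicit).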
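To see what the number of bits means for training, this sketch rounds a value to a given number of significant bits: 24 for FLOAT, 11 for HALF_FLOAT, and 8 as a rough stand-in for UNSIGNED_BYTE (which is actually fixed-point, not floating-point). The helper names are hypothetical, and exponent range limits are ignored.

```javascript
// floor(log2(|x|)) read from the exponent bits of a normal float64.
function exponentOf(x) {
  const view = new DataView(new ArrayBuffer(8));
  view.setFloat64(0, x); // big-endian by default
  return ((view.getUint16(0) >>> 4) & 0x7ff) - 1023;
}

// Round x to `bits` significant bits (including the implicit leading 1).
// Overflow and subnormals are ignored in this sketch.
function roundToBits(x, bits) {
  if (x === 0 || !Number.isFinite(x)) return x;
  const step = 2 ** (exponentOf(x) - (bits - 1)); // gap between representable values
  return Math.round(x / step) * step;
}
```

For example, a weight update of 0.001 applied to a weight of 1 survives with 11 bits (`roundToBits(1.001, 11)` gives 1.0009765625) but vanishes with 8 bits (`roundToBits(1.001, 8)` gives 1).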

Floating-point specials

highp float → 32 bits:

→ $F_{max} = 3.402823 \times 10^{38}$

FP special values:

- +Inf, -Inf, NaN,

- +Inf and -Inf can disappear (1/Inf = 0), but NaN cannot!
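JavaScript numbers are 64-bit doubles, so this sketch uses `Math.fround` to mimic 32-bit (highp) rounding and reproduce the behavior above. It follows IEEE-754; actual GPUs may behave differently around specials, since GLSL ES does not strictly require IEEE semantics.

```javascript
// Emulate 32-bit float arithmetic in JS by rounding with Math.fround.
const f32 = Math.fround;
const F_MAX = 3.4028234663852886e38; // largest finite float32

function specialsDemo() {
  const inf = f32(Math.exp(100)); // e^100 ~ 2.7e43 > F_MAX, so +Inf
  return {
    overflow: inf,                          // Infinity
    vanishes: f32(1 / inf),                 // 0: Inf can disappear...
    nanFromInfTimesZero: f32(inf * 0),      // NaN
    nanPropagates: f32(f32(inf * 0) + 100), // ...but NaN never goes away
  };
}
```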

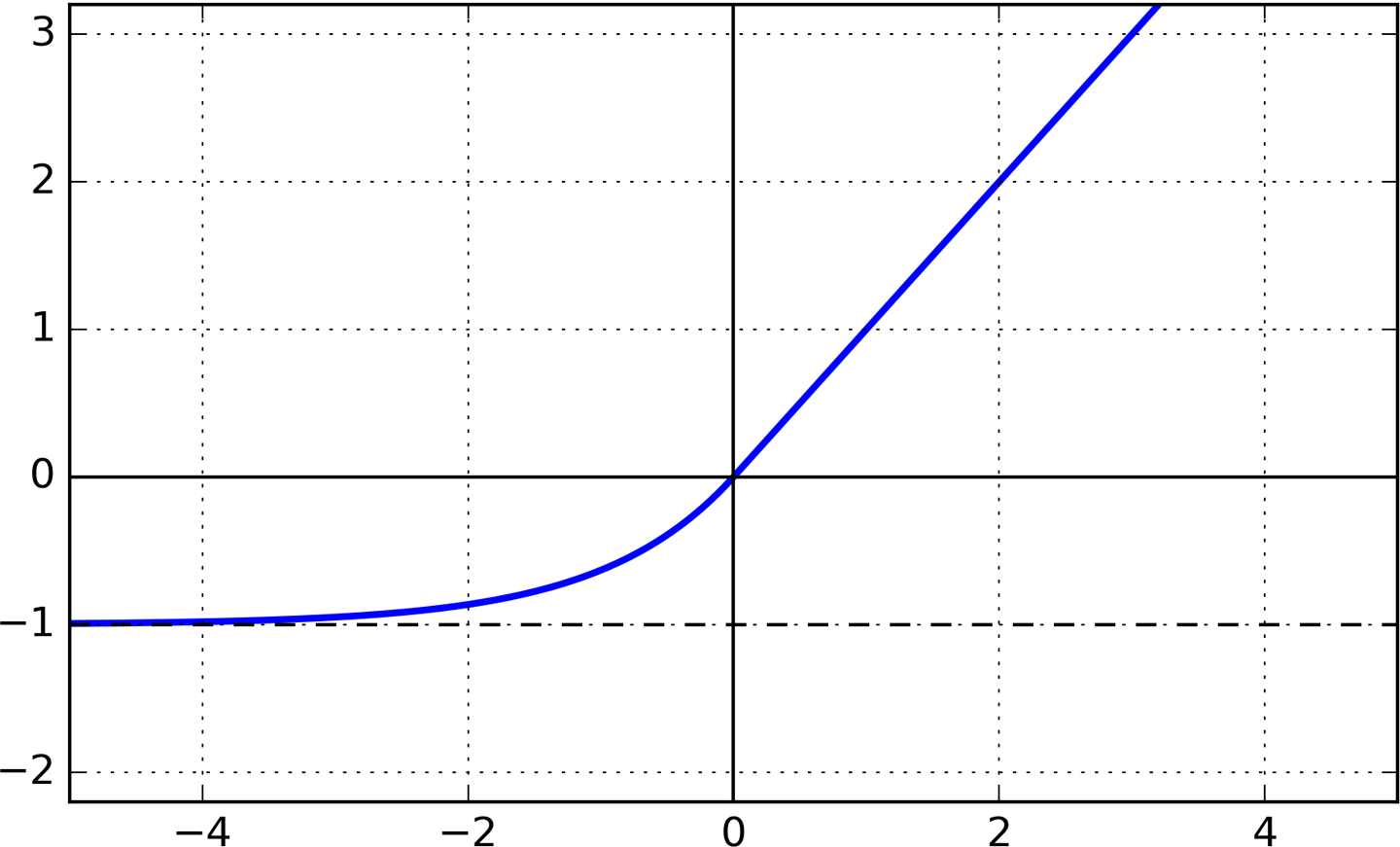

Example

float ELU(float x){
  return mix(exp(x)-1.0, x, step(0., x));
}

- x = 100 → ELU(100) should be 100,

- Compute: ELU(100) = mix(exp(100)-1, 100, 1) = (exp(100)-1) * 0 + 100 * 1,

- But $e^{100} \approx 2.7 \times 10^{43} > F_{max}$ → exp(100) = +Inf,

- So ELU(100) = +Inf * 0 + 100 = NaN + 100 = NaN.
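This JS sketch replays the worked example with float32 emulation (`Math.fround`) and JS versions of GLSL's `step(edge, x)` and `mix(a, b, t) = a*(1-t) + b*t`. The function names are hypothetical; the point is that the naive ELU returns NaN for large inputs while the `exp(-abs(x))` variant keeps both `mix()` terms finite.

```javascript
// Emulate GLSL's step() and mix() with float32 rounding (Math.fround).
const f32 = Math.fround;
const step = (edge, x) => (x < edge ? 0 : 1);               // GLSL step(edge, x)
const mix = (a, b, t) => f32(f32(a * (1 - t)) + f32(b * t)); // GLSL mix(a, b, t)

// Naive ELU: exp(x) overflows for large x, and Inf * 0 gives NaN in mix().
const elu = (x) => mix(f32(f32(Math.exp(x)) - 1), x, step(0, x));

// Safe ELU: exp(-|x|) stays in (0, 1], so both mix() terms are always finite.
const safeElu = (x) => mix(f32(f32(Math.exp(-Math.abs(x))) - 1), x, step(0, x));
```

Both versions agree wherever the naive one is finite; only the overflow case differs.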

Avoiding FP specials

- Avoid functions that can return FP specials, such as exp() or log(),

- If mix() or another GLSL interpolation function is called, make sure that none of its terms can be an FP special:

float safe_ELU(float x){
  return mix(exp(-abs(x))-1.0, x, step(0., x));
}

- Bound values from above and below (e.g. with clamp()),

- Use L1 and L2 regularization (w ← d*w with d < 1).
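The last two points can be sketched as a post-update step on a weight array (in a shader this would be something like `clamp(d * w, -wMax, wMax)`). The helper names, `d`, `wMax` and `lambda` are hypothetical hyperparameters; the L1 part uses soft-thresholding so that a weight shrinks toward 0 without crossing it.

```javascript
// L2 weight decay w <- d * w (d < 1), then clamp to [-wMax, wMax] so that
// weights cannot drift toward FP specials.
function decayAndClamp(weights, d, wMax) {
  if (!(d > 0 && d < 1)) throw new Error("decay factor d must be in (0, 1)");
  for (let i = 0; i < weights.length; i++) {
    const w = d * weights[i];
    weights[i] = Math.min(wMax, Math.max(-wMax, w));
  }
  return weights;
}

// L1 regularization as soft-thresholding: shrink |w| by lambda, stop at 0.
function l1Shrink(weights, lambda) {
  for (let i = 0; i < weights.length; i++) {
    const w = weights[i];
    weights[i] = Math.sign(w) * Math.max(Math.abs(w) - lambda, 0);
  }
  return weights;
}
```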

Compatibility

GPGPU required capabilities

Required:

- Float or half float textures,

- Render to texture with them.

Even better:

- Mipmapping on float/half float textures.

WebGL 1 limitations

- Requires the OES_texture_float or OES_texture_half_float extension,

- Better to have OES_texture_float_linear or OES_texture_half_float_linear,

- Also ask for EXT_color_buffer_float (named WEBGL_color_buffer_float in WebGL 1) and EXT_color_buffer_half_float,

- Test whether you can mipmap float/half float textures,

- Test whether you can render to float/half float textures.
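A possible shape for these checks in WebGL 1, as a sketch: the extension names are the official ones, `getExtension()` returns null when an extension is unsupported and enables it otherwise, and the helper names are hypothetical. Framebuffer completeness is a common render-to-texture test, though a driver may still misbehave, so a real check could also render a known value and read it back.

```javascript
// Ask for the float-related extensions (getExtension also enables them).
function detectFloatSupport(gl) {
  const has = (name) => Boolean(gl.getExtension(name));
  return {
    textureFloat: has("OES_texture_float"),
    textureHalfFloat: has("OES_texture_half_float"),
    textureFloatLinear: has("OES_texture_float_linear"),
    textureHalfFloatLinear: has("OES_texture_half_float_linear"),
    // WebGL 1 name first, then the WebGL 2 name
    colorBufferFloat: has("WEBGL_color_buffer_float") || has("EXT_color_buffer_float"),
    colorBufferHalfFloat: has("EXT_color_buffer_half_float"),
  };
}

// Attach a small texture of the given type (gl.FLOAT, or HALF_FLOAT_OES from
// the OES_texture_half_float extension object) to a framebuffer and check
// that the framebuffer is complete.
function canRenderToTexture(gl, type) {
  const texture = gl.createTexture();
  gl.bindTexture(gl.TEXTURE_2D, texture);
  gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_MIN_FILTER, gl.NEAREST);
  gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_MAG_FILTER, gl.NEAREST);
  gl.texImage2D(gl.TEXTURE_2D, 0, gl.RGBA, 2, 2, 0, gl.RGBA, type, null);
  const framebuffer = gl.createFramebuffer();
  gl.bindFramebuffer(gl.FRAMEBUFFER, framebuffer);
  gl.framebufferTexture2D(gl.FRAMEBUFFER, gl.COLOR_ATTACHMENT0, gl.TEXTURE_2D, texture, 0);
  const complete = gl.checkFramebufferStatus(gl.FRAMEBUFFER) === gl.FRAMEBUFFER_COMPLETE;
  // Clean up so the test leaves no state behind
  gl.bindFramebuffer(gl.FRAMEBUFFER, null);
  gl.deleteFramebuffer(framebuffer);
  gl.deleteTexture(texture);
  return complete;
}
```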

WebGL 2 limitations

- Float textures and half float textures are included!

- Render to texture with half float textures is included!

- → You can always do deep learning with WebGL 2!

- Still requires enabling OES_texture_float_linear or OES_texture_half_float_linear to use mipmapping on float/half float textures.
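In WebGL 2 the float formats are core, so allocation uses sized internal formats instead of extension constants. In this hypothetical helper, RGBA16F or RGBA32F is filled from a Float32Array with type `gl.FLOAT` (OpenGL ES 3.0 accepts FLOAT data for RGBA16F), which avoids any manual half float conversion. NEAREST filtering is used because LINEAR or mipmap filtering of RGBA32F would need OES_texture_float_linear.

```javascript
// Hypothetical WebGL 2 helper: allocate an RGBA data texture, half float
// (RGBA16F) or float (RGBA32F), optionally filled from a Float32Array.
function createDataTexture(gl, width, height, { halfFloat = true, data = null } = {}) {
  if (data !== null && data.length !== 4 * width * height) {
    throw new Error("data must hold 4 floats per pixel");
  }
  const texture = gl.createTexture();
  gl.bindTexture(gl.TEXTURE_2D, texture);
  // NEAREST: no filtering extension needed for either format
  gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_MIN_FILTER, gl.NEAREST);
  gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_MAG_FILTER, gl.NEAREST);
  const internalFormat = halfFloat ? gl.RGBA16F : gl.RGBA32F;
  // Source data is always gl.FLOAT; the driver converts to 16 bits if needed
  gl.texImage2D(gl.TEXTURE_2D, 0, internalFormat, width, height, 0, gl.RGBA, gl.FLOAT, data);
  return texture;
}
```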

HALF_FLOAT texture init

OES_texture_half_float → you can use half float textures.

Problem when initializing from an array: there is no JS Float16Array() type.

- Convert the JS float array → JS Uint16Array() holding the binary half float format,

- If that does not work (iPad): create a float texture, fill it from a JS Float32Array(), then copy it into the half float texture (render to texture).
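The "binary half float format" conversion can be sketched as follows. It reinterprets each value's float32 bits and rebuilds IEEE-754 binary16 bits (sign, re-biased exponent, top 10 mantissa bits). It rounds half up rather than to nearest even, so a few tie cases may differ by one unit from a hardware conversion; the helper names are hypothetical.

```javascript
// Reinterpret a float32 as its raw 32 bits through a shared buffer.
const f32Buffer = new Float32Array(1);
const u32Buffer = new Uint32Array(f32Buffer.buffer);

// Convert one number to IEEE-754 binary16 bits (round half up).
function toHalfBits(value) {
  f32Buffer[0] = value;
  const x = u32Buffer[0];
  const sign = (x >>> 16) & 0x8000;
  const exp32 = (x >>> 23) & 0xff;
  let mantissa = x & 0x7fffff;

  if (exp32 === 0xff) {
    // Inf stays Inf, NaN stays (quiet) NaN
    return sign | 0x7c00 | (mantissa !== 0 ? 0x200 : 0);
  }
  const exp16 = exp32 - 127 + 15; // re-bias the exponent
  if (exp16 >= 0x1f) return sign | 0x7c00; // too large: +/-Inf
  if (exp16 <= 0) {
    // Subnormal half (or zero): shift in the implicit leading 1
    if (exp16 < -10) return sign; // too small: +/-0
    mantissa |= 0x800000;
    const shift = 14 - exp16;
    let half = mantissa >>> shift;
    if ((mantissa >>> (shift - 1)) & 1) half += 1; // round half up
    return sign | half;
  }
  // Normal half: keep the top 10 mantissa bits; a rounding carry correctly
  // propagates into the exponent (up to Inf if needed)
  let half = sign | (exp16 << 10) | (mantissa >>> 13);
  if (mantissa & 0x1000) half += 1;
  return half;
}

// JS float array -> Uint16Array in binary half float format.
function toHalfFloatArray(floats) {
  const out = new Uint16Array(floats.length);
  for (let i = 0; i < floats.length; i++) out[i] = toHalfBits(floats[i]);
  return out;
}
```

With OES_texture_half_float, the resulting array would then be passed as the last argument of `gl.texImage2D(gl.TEXTURE_2D, 0, gl.RGBA, w, h, 0, gl.RGBA, ext.HALF_FLOAT_OES, halfData)`, where `ext` is the extension object.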


Questions?

xavier@jeeliz.com - @xavierbourry

Career advice

- Main experience: freelance WebGL/three.js developer and trainer in Paris from 2011 to 2016,

- Jeeliz: I have already made enough mistakes to give some advice.

Do things for free

- Open-source code repositories,

- tutorials,

- demo applications,

- events,

- blog posts.

Starting a startup

- Team up with people who have different profiles,

- Project: no bulls***, not too ambitious,

- fits into an existing tech ecosystem,

- release free stuff to reach the people of this ecosystem,

- as soon as you can sell something, sell it, even at a low price,

- You won't make money on your initial idea.
